PureSource™

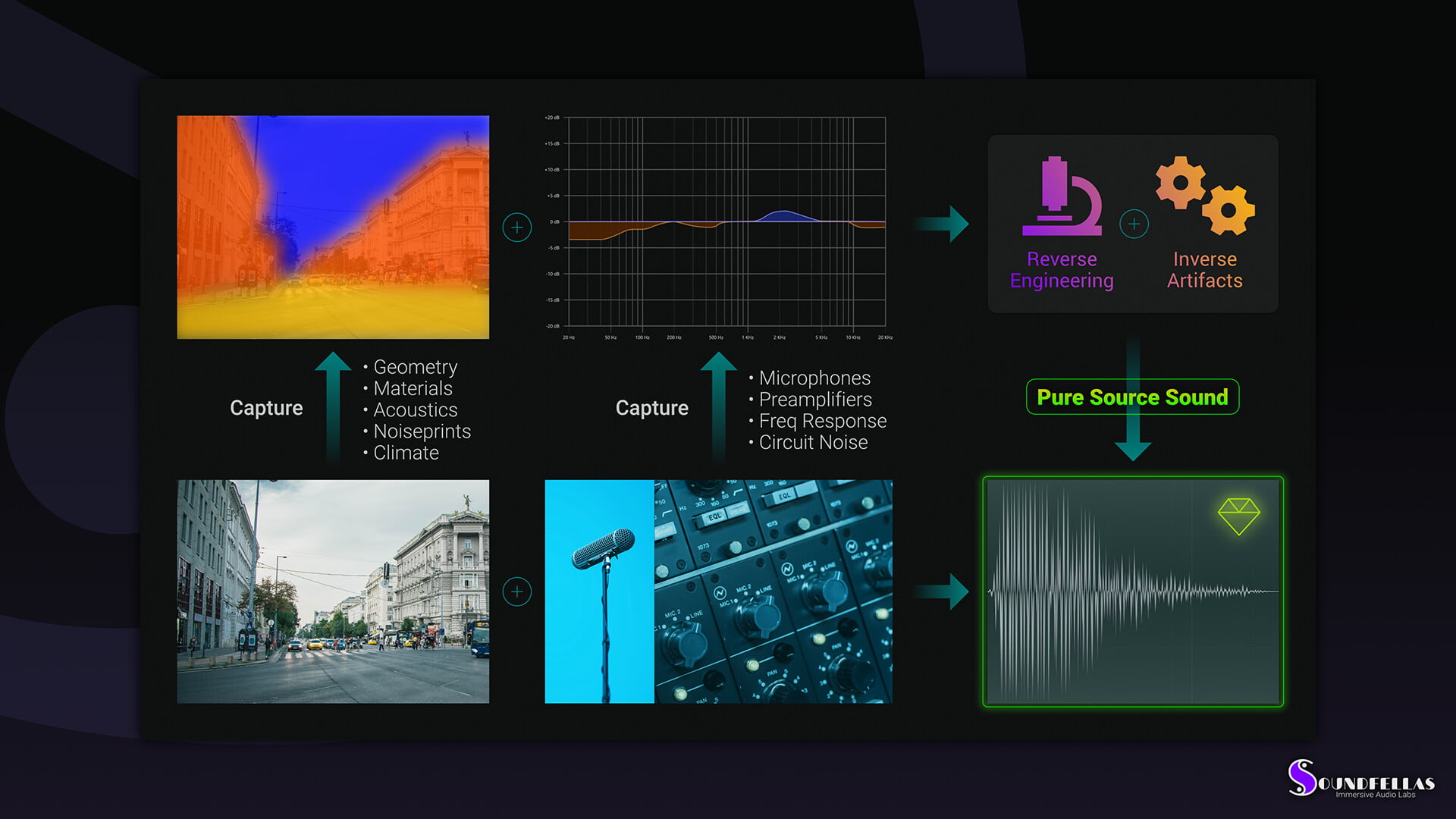

We dereverberate our recordings to make them as anechoic as possible. We also flatten any coloration added by microphones, preamps, and other equipment. Revealing the source’s pure sound means better integration with reverb processors, occlusion filters, and 3D audio engines, and a more immersive audio experience for the end user.

Introduction

What is PureSource™

PureSource™ is the ingestion process we apply to every sound we record.

During PureSource™, and depending on the material, we use various signal processing techniques from sound restoration and forensic audio, together with reverse engineering, to recover the recorded source’s sound in its purest form.

That means removing any acoustic reverb that was captured in the location of the recording, audio equipment artifacts, and even the Doppler effect if the source was passing by at the time of the recording.

Why we created PureSource™

Audio production is heavily based on virtual acoustics.

Traditional media like music, games, and film, as well as contemporary media like virtual reality, augmented reality, and mixed reality, all use virtual acoustics to create the sound of the scenes in the story or experience.

In those media, virtual acoustic algorithms simulate reflections (reverb processors), occlusion (equalizers), and distance damping (filters linked with volume and late reflections modeling).
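As a rough illustration of that chain, here is a minimal Python sketch using only NumPy. It is not how any particular engine or middleware works: the function names, the one-pole filter standing in for an occlusion equalizer, and the decaying-noise impulse response standing in for a reverb processor are all assumptions chosen for brevity.

```python
import numpy as np

def distance_gain(distance_m, ref_m=1.0):
    """Inverse-distance law: level drops about 6 dB per doubling of distance."""
    return ref_m / max(distance_m, ref_m)

def one_pole_lowpass(x, cutoff_hz, sample_rate):
    """Simple one-pole low-pass, a stand-in for an occlusion filter."""
    a = np.exp(-2.0 * np.pi * cutoff_hz / sample_rate)
    y = np.empty_like(x, dtype=float)
    state = 0.0
    for i, sample in enumerate(x):
        state = (1.0 - a) * sample + a * state
        y[i] = state
    return y

def synthetic_impulse_response(rt60_s, sample_rate, seed=0):
    """Toy room response: noise whose envelope falls by 60 dB after rt60_s."""
    rng = np.random.default_rng(seed)
    n = int(rt60_s * sample_rate)
    t = np.arange(n) / sample_rate
    envelope = 10.0 ** (-3.0 * t / rt60_s)
    ir = 0.05 * rng.standard_normal(n) * envelope
    ir[0] = 1.0  # direct sound
    return ir

def render(dry, sample_rate, distance_m, occlusion_cutoff_hz, rt60_s):
    """Apply distance damping, then occlusion, then reverb to a dry source."""
    x = np.asarray(dry, dtype=float) * distance_gain(distance_m)
    x = one_pole_lowpass(x, occlusion_cutoff_hz, sample_rate)
    return np.convolve(x, synthetic_impulse_response(rt60_s, sample_rate))
```

Every stage in this sketch treats its input as the dry source, so anything already baked into the recording passes through the same processing as the source itself.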

In music and film, the acoustics simulation happens in the studio, while in games and VR, it’s achieved in real-time by the game engine or an interactive audio middleware framework.

When sound is recorded, even in controlled environments like the studio, all of the acoustics tend to get captured together with the sound of the source, and recording equipment adds artifacts. A recording studio is not an anechoic chamber.

When recorded sounds that contain any of those artifacts, even in the smallest quantity, are combined with the virtual acoustics of your game level, film scene, or VR space, the result is not fully consistent with what the listener’s brain expects to hear.
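One way to see why is to model both rooms as linear impulse responses, which is a simplifying assumption. Under that model, sending a recording that already contains its room through a virtual room is equivalent to placing the dry source in a single room whose response is the two convolved together. The toy signals and names below are purely illustrative.

```python
import numpy as np

def toy_ir(length, seed):
    """Toy impulse response: direct sound followed by decaying noise."""
    rng = np.random.default_rng(seed)
    envelope = np.exp(-np.arange(length) / (length / 5.0))
    ir = 0.1 * rng.standard_normal(length) * envelope
    ir[0] = 1.0
    return ir

dry = np.random.default_rng(0).standard_normal(256)  # stand-in for an anechoic source
recording_room = toy_ir(128, seed=1)                 # acoustics captured with the recording
virtual_room = toy_ir(200, seed=2)                   # acoustics added by the project

# What the listener hears when the recording already contains its room.
recorded = np.convolve(dry, recording_room)
wet_from_recorded = np.convolve(recorded, virtual_room)

# The same signal, expressed as the dry source inside one combined room.
combined_room = np.convolve(recording_room, virtual_room)
wet_equivalent = np.convolve(dry, combined_room)

# What the listener hears when the source is anechoic.
wet_from_dry = np.convolve(dry, virtual_room)
```

In this model the combined response is longer than the virtual room alone, so the captured acoustics are stacked on top of the acoustics the project intends to present.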

This breaks the suspension of disbelief and ends immersion, not to mention that your audience perceives the quality of your creation as lower than it should be.

Furthermore, when sounds are moved around in the virtual space, those that are not pure enough do not spatialize well, meaning that the listener cannot perceive their exact location. Sound localization is an essential element of the sound experience in any medium, and much of the experience depends on it in games, films, and VR.

We wanted a production process that would let us produce timeless audio assets that spatialize well and sound realistic in any project and playback scenario. So we created PureSource™ to deliver the purest source sounds on the market.

PureSource™ content common use cases

The principle behind PureSource™ is to produce sound assets that contain the source’s sound in its purest form so that it matches both the virtual acoustics of a project and the psychoacoustics of the listener.

Here are some cases in which PureSource™-produced audio assets shine:

- Use any algorithmic or convolution reverb. The anechoic characteristics of any PureSource™-derived asset will make the sound more realistic.

- Boost your productivity, as you don’t need to clear any artifacts from the audio assets yourself: no reverberation, irrelevant elements, or unwanted frequency response distortions to correct. Just drag and drop them into your project and focus on creativity.

- Do you work in games? Great. By using PureSource™-derived audio assets together with the contemporary audio capabilities of game engines, you can create truly believable worlds.

- Do you work in film? Awesome. Any assets processed using PureSource™ are going to fit in your scenes right from the import.

- Virtual reality and any other digital reality (VR/AR/MR/XR). PureSource™-derived sounds play nicely with spatialization, ambisonics, and any immersive audio solution you use to create your experiences.

Of course, those are only some common use scenarios. Beyond those, any project that needs believable sound that immerses the audience and spatializes as well as possible will benefit from sounds produced using PureSource™.