HDR Audio

Our end-to-end high dynamic range pipeline yields crystal-clear sound with more detail, punchier attacks, and smoother fades that sounds great in any playback scenario.

Our production pipeline is calibrated to high standards that follow scientific laboratory practices, so we can offer you crystal-clear assets and services of the highest possible fidelity.

Having a high dynamic range allows us to keep all the subtle details that natural sound events hide inside them. The end result is sound with character that is natural and realistic.

All our processes are tuned to produce production-ready assets containing realistic details that cut through the mix. Our rendering process gives you production-ready elements, so you can focus on your creativity.

Having an end-to-end high dynamic range production pipeline is important for keeping the subtle nuances of sound that deliver a detailed character to the listener’s brain.

By utilizing cutting-edge technologies throughout our pipeline, we capture, process, and deliver superior audio assets, full of detail and with a huge dynamic range, for you to manipulate even further.

You get sound effects that cut through the mix, ambient soundscape loops so detailed that you feel like you’re in the scene, and music tracks with clear dynamic contrast. Drag them into your project and start with great sound right away.

Across our complete production pipeline, from recording and synthesis to processing and rendering, we use 32-bit floating-point (IEEE) raw PCM data stored in ITU RF64 wave files. This resolution gives us a dynamic range of 1528 dB.

To put that in perspective, 16-bit gives 96 dB of dynamic range (DR) and 24-bit gives 144 dB, while plain 32-bit integer gives 192 dB.
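
As a quick check of the integer figures above, here is a minimal Python sketch of the usual rule of thumb for linear PCM, where each bit adds about 6.02 dB. The function name `pcm_dynamic_range_db` is only illustrative. The sketch covers integer formats only; the floating-point figure depends on the exponent range and is not computed here.

```python
import math


def pcm_dynamic_range_db(bits: int) -> float:
    """Theoretical dynamic range of linear integer PCM: 20 * log10(2 ** bits)."""
    return 20.0 * math.log10(2.0 ** bits)


for bits in (16, 24, 32):
    # Rounds to the familiar 96 dB, 144 dB, and 192 dB figures.
    print(f"{bits}-bit integer PCM: {pcm_dynamic_range_db(bits):.1f} dB")
```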

And to better understand sound levels:

On top of a high-resolution file format, we use an even higher dynamic range in our toolset. All our software tools feature an end-to-end 64-bit floating-point calculation pipeline.

By combining cutting-edge audio technologies with high-resolution data, we have more than 1528 dB to play with in our sound design, and no fear of clipping or losing details in the process. So whatever sound mangling and processing our designs require, we always get a very detailed result with enough contrast between all the nuances of the sound.

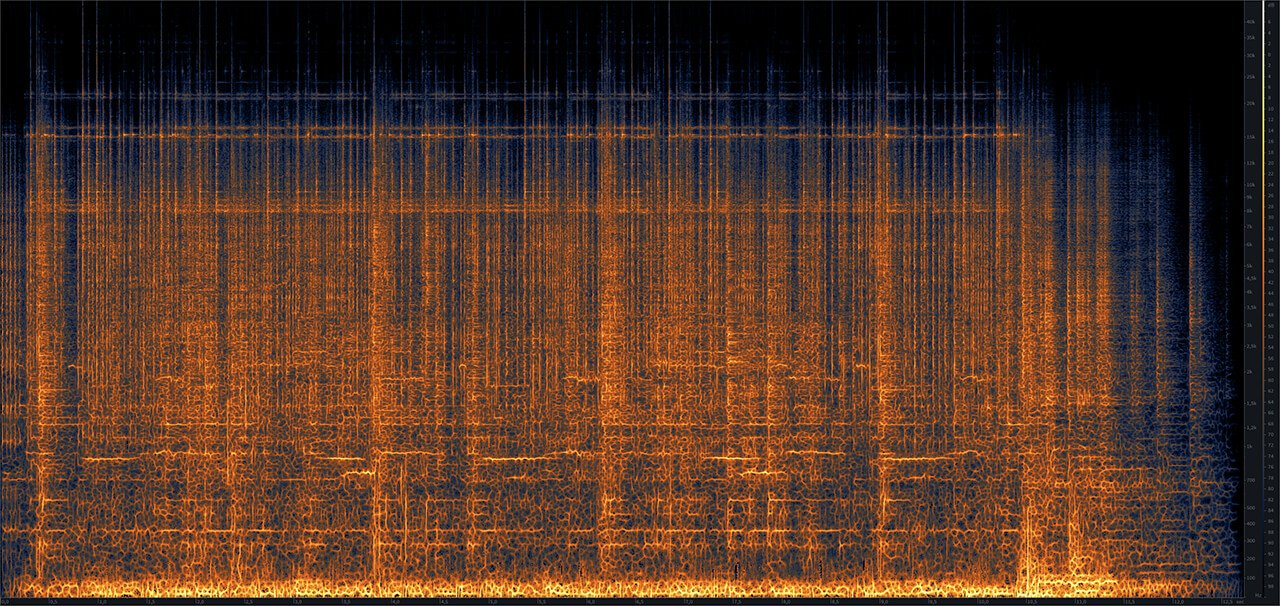

This image shows the intricate details that hide in a sound event, revealed only when high dynamic range techniques are properly applied throughout the production process.

In combination with a high bit-depth production line, we also use very high sampling resolution in our work.

Our base sampling rate might be 96 kHz, but on top of that we use oversampling, from 4x up to 512x. That means that when needed we work with sampling rates of up to almost 50 MHz (96 kHz × 512 = 49.152 MHz). Yes, you read that correctly, that’s 50 megahertz. We use a render farm with modern CPUs and we still need days to render one of our sound libraries.

Working with such high resolutions comes at a big cost in hardware and production time, and needs a careful approach for each type of sound to avoid producing artifacts, but the benefit is crystal-clear sound. It’s the equivalent of rendering 3D animation or compositing VFX using the best data range to preserve every detail you worked hard to achieve, which you can then convert to whatever target you like.

Oversampling is also another method to further increase the dynamic range of PCM audio. Oversampling means multiplying the working sampling rate (time resolution) of the audio. Assuming that the quantization error is uniformly distributed across frequency, a higher sampling rate spreads the same noise over a wider band, so much of the quantization noise ends up at very high frequencies, above the audible range.
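
To give a feel for the numbers, the sketch below uses the textbook idealization: if the quantization noise is white and everything above the original band is later filtered out perfectly, plain oversampling lowers the in-band noise by 10·log10 of the oversampling ratio. The function names are illustrative, and real converters and filters will not reach these ideal figures exactly.

```python
import math


def effective_rate_hz(base_rate_hz: int, oversampling: int) -> int:
    """Working sampling rate after oversampling."""
    return base_rate_hz * oversampling


def inband_noise_reduction_db(oversampling: int) -> float:
    """Ideal in-band quantization noise reduction, assuming white noise
    and a perfect low-pass filter before returning to the base rate."""
    return 10.0 * math.log10(oversampling)


for ratio in (4, 64, 512):
    rate_mhz = effective_rate_hz(96_000, ratio) / 1e6
    gain_db = inband_noise_reduction_db(ratio)
    print(f"{ratio}x: {rate_mhz:.3f} MHz, about {gain_db:.1f} dB less in-band noise")
```

Under these assumptions, every 4x step is worth roughly 6 dB, which is about one extra bit of resolution.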

Another reason we use oversampling is that processes like distortion, harmonizing, compressing, etc., generate higher harmonics from the original material that exceed the Nyquist frequency (the limit of sampling, at half of the sampling rate). Those frequencies are mirrored back below the Nyquist frequency and are known as alias frequencies, and the phenomenon is known as aliasing. If left untreated, aliasing is considered a bad artifact because its content is not harmonically related to the source and it sounds like unnatural digital noise.
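
The mirroring can be worked out with a few lines of arithmetic. In this small sketch (the helper name `alias_frequency_hz` and the 10 kHz example tone are assumptions chosen for illustration), the 7th harmonic of a 10 kHz tone lands at 70 kHz. At a 96 kHz sampling rate it folds back to an unrelated 26 kHz, while at 4x oversampling (384 kHz) it stays where it belongs and can be filtered out cleanly later.

```python
def alias_frequency_hz(freq_hz: int, sample_rate_hz: int) -> int:
    """Frequency at which a tone shows up after sampling.

    Anything above the Nyquist frequency (sample_rate_hz / 2) is folded
    back into the range 0..Nyquist.
    """
    folded = freq_hz % sample_rate_hz
    return min(folded, sample_rate_hz - folded)


harmonic_hz = 7 * 10_000  # 7th harmonic of a 10 kHz tone

print(alias_frequency_hz(harmonic_hz, 96_000))   # folded below Nyquist (48 kHz)
print(alias_frequency_hz(harmonic_hz, 384_000))  # below Nyquist (192 kHz), unchanged
```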

After we finish processing our audio material, we reverse the oversampling and return the signal to its previous (default) sampling frequency, effectively removing the artifacts of processing together with the quantization error that we shifted higher at the earlier stage. That way we achieve a crystal-clear (free from aliasing) signal with less quantization error (more dynamic range) for all our sound libraries and for the audio we create for our clients.

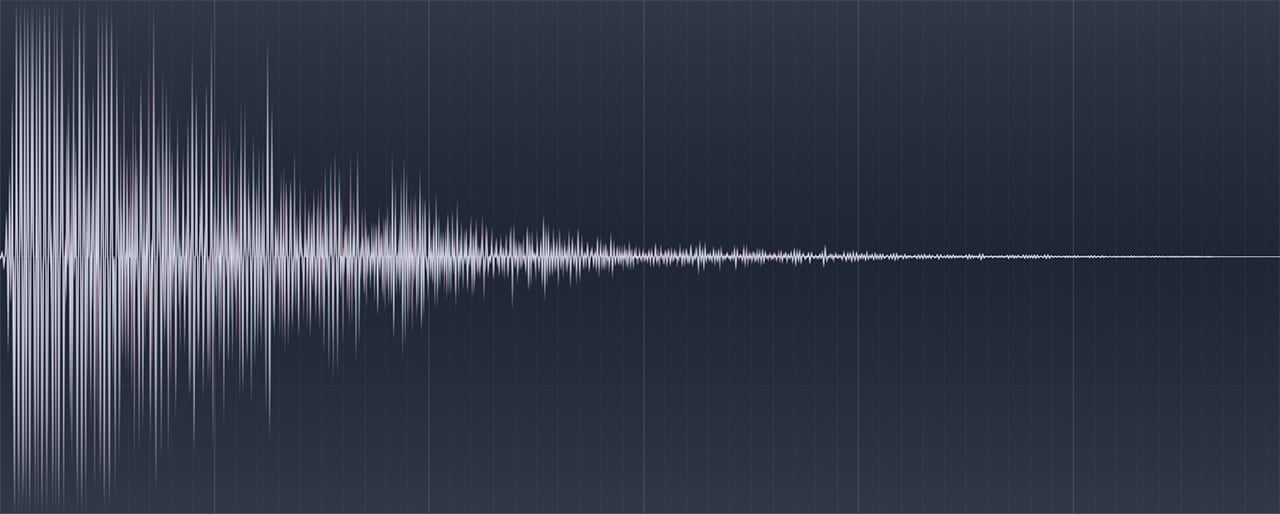

Just like music, a sound event can have silent moments and big, pompous ones, and the same goes for ambient soundscapes. The image above shows one of our sound effects, rising fast to a loud peak and then slowly retreating to the quietest of vibrations. A high dynamic range pipeline is needed to correctly preserve those great differences and produce the right contrast between the micro-events of the sound.

Setting up a rendering pipeline is an ongoing process. It’s not as simple as setting a bunch of numbers in a render dialog box.

In our world, old technologies get upgraded and new technologies emerge, and their combination creates an ever-changing landscape. We strive to keep up in harmony with those changes so you always get the best from us.

Choosing output formats and rendering settings requires thorough testing of the next stages in which our audio assets will be used. How does the Unreal engine decode and encode sound for different target platforms? When the Unity engine decodes Ogg files to WAV, does that introduce quantization noise even if we use 24-bit audio settings in the Unity editor? If a customer uses Audacity to create new sounds from our assets, how do we make sure they get the best possible results? What will happen if our customer uses dither on their monitoring chain? Those are some of the many questions one needs to answer before choosing render settings, and as you can see, the answers change as authoring applications and technologies evolve.

For the tech-heads, here’s what we currently practice…

The file formats we export depend on the retail channel that we supply, because each vendor has its own restrictions and specifications with which we have to comply.

For our own store we use 96 kHz 24-bit raw PCM wave files, which are the industry standard for audio assets at the production stage.

The assets we sell through the Unity Asset Store are rendered in the Ogg Vorbis and FLAC file formats, and we use 48 kHz 24-bit resolution, which is high enough for any use in games and conforms to the asset size requirements of the Unity Asset Store. When you buy one version you also get access to the other, and we recommend using the FLAC version if you use Unity v2020.x or later.

As we convert our assets to 24-bit at the end, chosen for its compatibility with all applications and its high dynamic range, we use dithering and noise shaping.

We dither our renders with TPDF (Triangular Probability Density Function) noise generated by the PRVHash (Pseudo-Random-Value Hash) generator by Aleksey Vaneev. This is one of the cutting-edge algorithms for creating dither noise, and it’s the most neutral we have ever heard (we made the decision through double-blind critical listening tests).
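
For readers who have not met TPDF dither before, here is a minimal sketch of the general idea, not of the production chain described above. It assumes samples are floats in the range -1.0 to 1.0, and it swaps in Python's standard `random` module in place of PRVHash. The function name `quantize_tpdf` is hypothetical.

```python
import random
from typing import Optional


def quantize_tpdf(sample: float, bits: int = 24,
                  rng: Optional[random.Random] = None) -> int:
    """Quantize a float sample to a signed integer using TPDF dither.

    Assumption: Python's `random` stands in for the noise generator;
    this is not PRVHash.
    """
    rng = rng if rng is not None else random.Random()
    full_scale = 2 ** (bits - 1)
    # The sum of two independent uniform values, each within +/- 0.5 LSB,
    # has a triangular distribution spanning +/- 1 LSB.
    dither = (rng.random() - 0.5) + (rng.random() - 0.5)
    value = round(sample * full_scale + dither)
    # Clamp to the valid signed integer range for this bit depth.
    return max(-full_scale, min(full_scale - 1, value))


rng = random.Random(1234)  # fixed seed so repeated runs give the same noise
print([quantize_tpdf(0.25, 24, rng) for _ in range(5)])
```

Each output value sits within one step of the undithered target, and the small random offset is what decorrelates the quantization error from the signal.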

To top it all off, we also apply a complex, psychoacoustically enhanced noise shaping filter to the dither noise, which increases the apparent signal-to-noise ratio of the signal. To minimize the probability of perceiving the dither noise, we base the shape of the noise shaping filter on an “absolute threshold of hearing” model, which also dynamically adapts its curve to the audio material that passes through the dither. This makes the noise practically undetectable, while keeping the perceived dynamic range higher than the bit depth alone would allow.
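
The principle behind noise shaping can be shown with a far simpler filter than the adaptive psychoacoustic one described above. The sketch below is a plain first-order error-feedback quantizer, given only as an illustration: it subtracts the previous sample's quantization error from the next input, which pushes the noise toward high frequencies. The function name, the float input range of -1.0 to 1.0, and the use of Python's `random` for the dither are all assumptions.

```python
import random
from typing import List, Sequence


def quantize_noise_shaped(samples: Sequence[float], bits: int = 24,
                          seed: int = 0) -> List[int]:
    """First-order error-feedback quantizer with TPDF dither.

    Illustration only: a fixed first-order shape, not a psychoacoustic
    or signal-adaptive filter.
    """
    rng = random.Random(seed)
    full_scale = 2 ** (bits - 1)
    error = 0.0
    out: List[int] = []
    for sample in samples:
        # Feed back the previous error so the noise is high-pass shaped.
        target = sample * full_scale - error
        dither = (rng.random() - 0.5) + (rng.random() - 0.5)
        value = round(target + dither)
        value = max(-full_scale, min(full_scale - 1, value))
        error = value - target  # total error made on this sample
        out.append(value)
    return out


# A constant input of 0.3 LSB cannot be stored directly in integers,
# but the shaped output averages out to it over time.
quiet = [0.3 / 2 ** 23] * 1000
shaped = quantize_noise_shaped(quiet)
print(sum(shaped) / len(shaped))
```

Because the errors cancel from one sample to the next, the running total of the output stays within a couple of steps of the running total of the input, which is another way of saying the noise has very little low-frequency content.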

On the topic of dither, we have a surprise that audio nerds will love. We also dither before we convert to lossy formats, such as when we render to Ogg Vorbis files for the Unity Asset Store. That might sound redundant, but when you download and use the assets within the Unity editor, they get converted back to PCM data behind the scenes, so dither takes care of any problems that would arise from quantization errors at that stage. We take care of the details, you focus on your creativity.

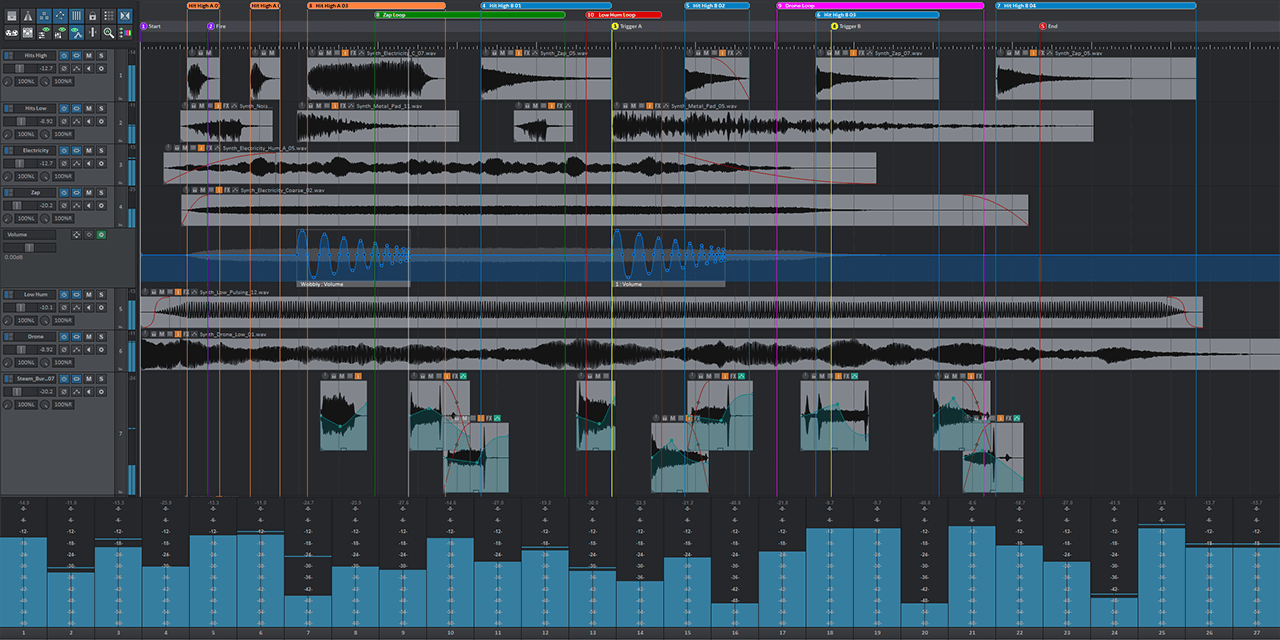

As you can see in the image above, compositing a complex sound event takes many elements, mixed with intricate smoothing between them, and uses a lot of layers with heavy processing. Having a high dynamic range pipeline is crucial to creating and delivering high-fidelity audio for our sound libraries and bespoke projects.

Numbers are numbers. Using huge files and setting your equipment to big numbers doesn’t necessarily mean that the content is of high quality, or fidelity for that matter.

High dynamic range audio would mean nothing if the audio content we create wasn’t detailed enough to need it.

At SoundFellas we always spend time setting our pipelines and equipment to laboratory specifications, and when we choose a setting or a format, we know exactly the reason we do it.

Each technical decision we make, from audio capture, through processing, to finalization and rendering to distribution formats, is made with you, the customer, in mind. Our only mission is to provide you with the best audio material for your projects, so you can focus on what matters most: your creation, your gift to the world.

* We don’t spam and we don’t use your email for anything else than to inform you when we share knowledge or we have an update.

* All emails feature a link for you to unsubscribe at any time.

* By subscribing you agree to our terms of use and privacy policy.

A MediaFlake Ltd Brand

41 Agiou Dimitriou, 18546

Athens, Greece

EU VAT ID: EL997735980

Business Reg.: 277317

Learn more about SoundFellas

©2011 SoundFellas Immersive Audio Labs, All Rights Reserved.