AuthorNorm™

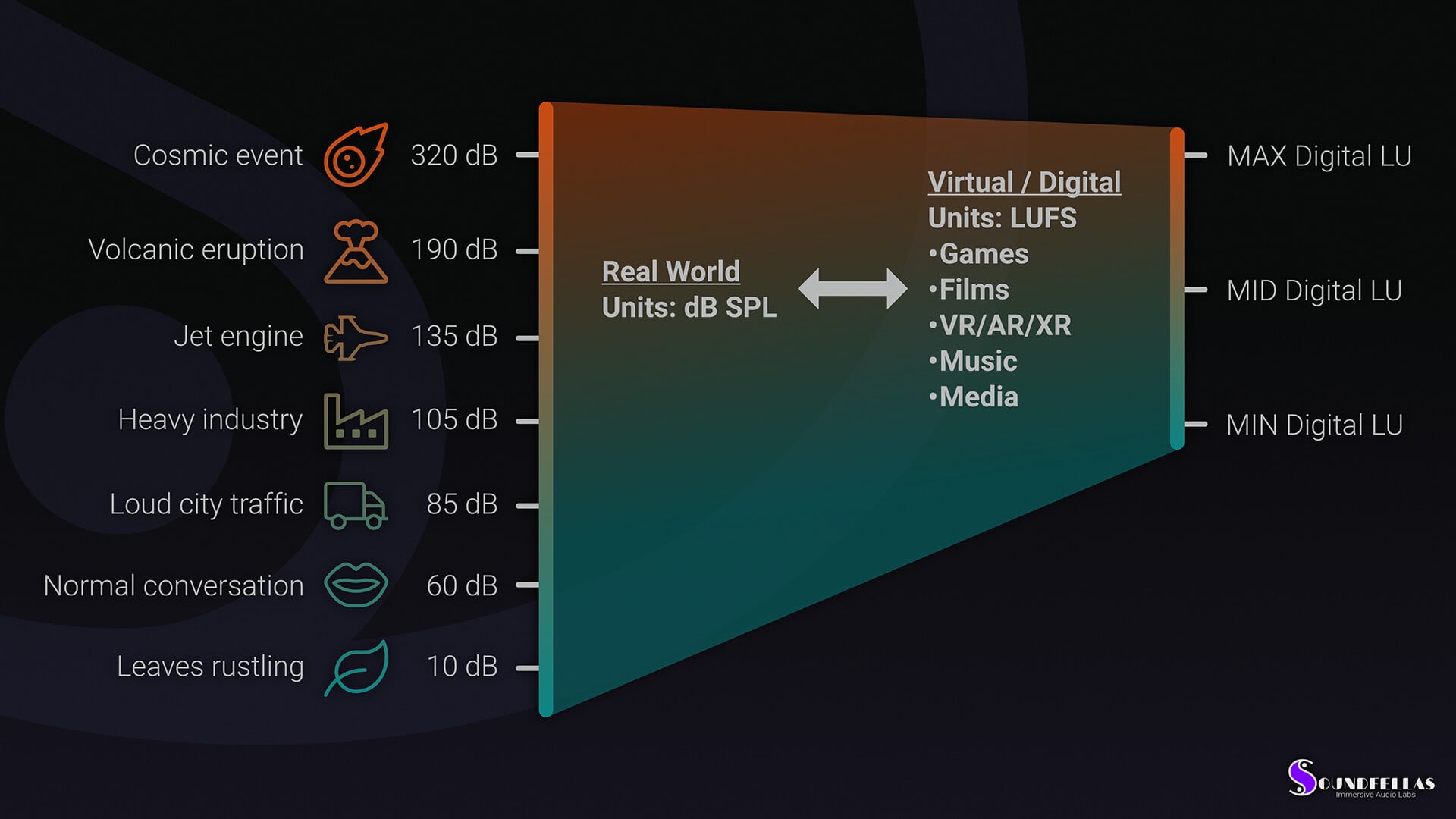

AuthorNorm™ is a perceived loudness normalization protocol that fuses the natural behavior of sound with the way we use sound in digital media authoring. Designed to normalize audio assets used in the production and post-production stages, it’s perfect for any media authoring work.

Introduction

What is AuthorNorm™

AuthorNorm™ is the SoundFellas approach to loudness control from the early stages of pre-production and into production.

AuthorNorm™ is a protocol that can be used in production or as audio normalization logic in audio processors and software tools.

What was the problem?

We developed AuthorNorm™ because, after creating and processing millions of audio files, we found no solution for normalizing audio for use in the authoring stage of a production.

Sure, there are many ways to measure and normalize sounds: RMS, ITU-R BS.1770, Peak, True-Peak, and many more. But in production, the creator needs speed and intuitiveness, and all the methods mentioned above either do a poor job of normalizing sounds of different durations or timbre types (tonal/noisy), or destroy the naturalness of the sound by moving it far away from its natural level.

Our solution

We measured different classes of sounds used in entertainment media (film, games, virtual reality, etc.) and audio tools (music instrument plugins, samplers, etc.) and found the best way to master the loudness of each class, incorporating methods drawn from real-world measurements and our research in psychoacoustics.

The protocol and methods we created were developed into AuthorNorm™, a way to master the perceived loudness of sound assets to be used in authoring workflows. Using sounds mastered using AuthorNorm™, a game developer or a filmmaker feels the natural loudness of the sounds they import into their projects. The assets need less mixing as they closely match the levels they should have in the final product.

Software developers, industrial audio designers, and audio plugin developers can create tools and embedded systems that perform naturally with less effort and without the need to pre-master their sound assets before importing them into their projects.

Think about it: if everything is normalized to the same loudness level, a quiet breeze sounds as loud as a spaceship’s engine, and balancing it in the mix takes more work.

We use a different pipeline for each sound class:

- Ambience loops are normalized by mapping them to a proprietary digital scale derived from real-world measurements, so they sound natural right out of the box.

- Sound effects and Foley are normalized to a standard level that lets them cut through a proper mix while avoiding the unwanted distortions that make everything sound the same and compromise character.

- Music is normalized to the standard level at which most commercial music soundtracks are produced.

- Dialog is normalized to a standard level that makes it easy to mix with complex content while protecting intelligibility, prosody, and drama.

- Impulse responses are normalized to different standard levels based on their acoustic or psychoacoustic simulation intent.
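The idea of one pipeline per sound class can be sketched as a small lookup. This is only an illustration: every number below is a placeholder, the function names are hypothetical, and the simple one-to-one mapping from real-world sound pressure level to a digital level is an assumption. The actual AuthorNorm™ levels and scale are proprietary and are not given in this document.

```python
# Placeholder per-class targets in dBFS. These values are invented for
# illustration and are NOT the AuthorNorm levels, which are proprietary.
FIXED_TARGETS_DB = {
    "sfx": -18.0,
    "foley": -18.0,
    "music": -16.0,
    "dialog": -20.0,
}

# Assumed anchor for ambiences: 100 dB SPL in the real world maps to 0 dBFS,
# and quieter scenes scale down one-to-one. Purely hypothetical.
AMBIENCE_SPL_AT_FULL_SCALE = 100.0


def target_level_db(asset_class, real_world_spl=None):
    """Return the target level in dBFS for an asset of the given class."""
    if asset_class == "ambience":
        if real_world_spl is None:
            raise ValueError("ambience assets need a real-world SPL estimate")
        return real_world_spl - AMBIENCE_SPL_AT_FULL_SCALE
    return FIXED_TARGETS_DB[asset_class]


def gain_db(measured_db, asset_class, real_world_spl=None):
    """Gain in dB that moves a measured level onto the class target."""
    return target_level_db(asset_class, real_world_spl) - measured_db


# A quiet breeze and a spaceship engine keep their real-world distance
# instead of being forced onto one shared level.
breeze_target = target_level_db("ambience", real_world_spl=35.0)
engine_target = target_level_db("ambience", real_world_spl=90.0)
print(engine_target - breeze_target)
```

With a single shared target, both ambiences would land on the same level and the difference between them would have to be rebuilt by hand in the mix.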

AuthorNorm™ is a proprietary perceived loudness normalization protocol, and it’s the perfect way to normalize audio assets for fast ingestion and easy mixing in game engines, video editors, digital audio workstations, virtual music instruments and samplers, and any other authoring application.

The fine control of dynamics

Proper dynamic range control

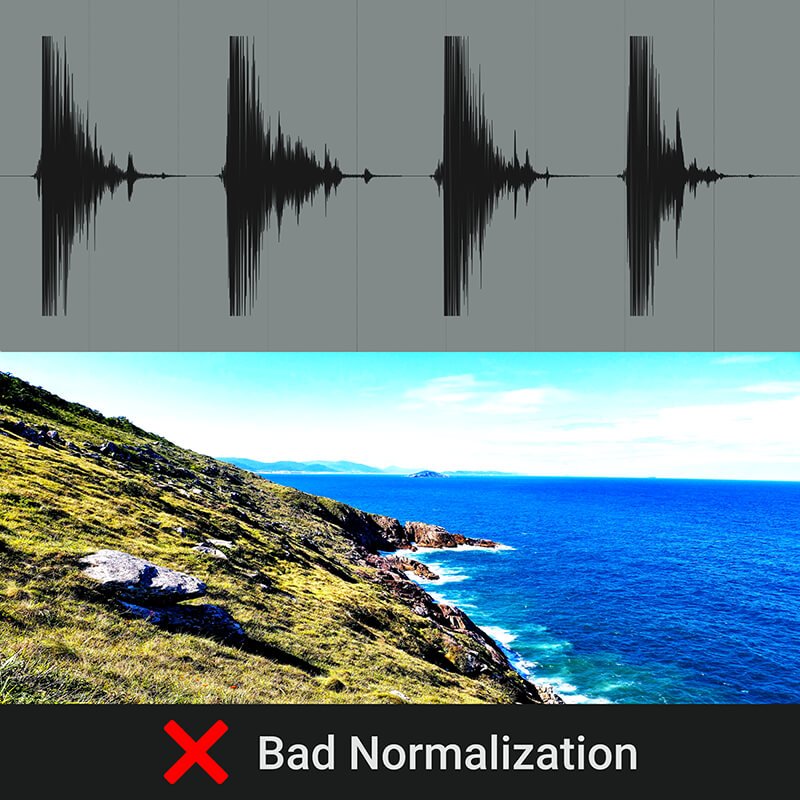

While normalizing an audio sample seems like an easy task, there’s more to it than meets the eye.

If you normalize to peak level, your final samples will not be perceived as equally loud because of how human hearing works. And if you normalize based on perceived loudness, the peaks might end up too loud and get clipped, producing non-harmonic distortion (the kind you don’t want).

Here’s an example of what happens when content producers normalize loudness and peak carelessly, without following any protocol. The image below the waveform shows the visual equivalent.
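The trade-off between peak and loudness normalization can be shown with two synthetic signals. The sketch below is a simplified illustration, not the AuthorNorm™ method: it uses plain RMS as a stand-in for perceived loudness, sample peak instead of true peak, and a hypothetical RMS target of -20 dBFS.

```python
import math


def peak_db(samples):
    """Sample peak level in dBFS (not true peak)."""
    return 20.0 * math.log10(max(abs(s) for s in samples))


def rms_db(samples):
    """RMS level in dBFS."""
    mean_square = sum(s * s for s in samples) / len(samples)
    return 10.0 * math.log10(mean_square)


def apply_gain(samples, gain_db):
    factor = 10.0 ** (gain_db / 20.0)
    return [s * factor for s in samples]


# A steady tone (low crest factor) and a single click in silence
# (very high crest factor), both with the same sample peak of 0.5.
tone = [0.5 * math.sin(2.0 * math.pi * 10 * n / 1000) for n in range(1000)]
click = [0.0] * 1000
click[100] = 0.5

# 1) Peak normalization: both peaks match, but the RMS levels end up far apart.
CEILING_DB = -2.0
tone_peak = apply_gain(tone, CEILING_DB - peak_db(tone))
click_peak = apply_gain(click, CEILING_DB - peak_db(click))
rms_gap_db = rms_db(tone_peak) - rms_db(click_peak)

# 2) RMS normalization to an assumed target: the levels match,
#    but the click's peak is pushed above full scale and would clip.
TARGET_RMS_DB = -20.0
tone_rms = apply_gain(tone, TARGET_RMS_DB - rms_db(tone))
click_rms = apply_gain(click, TARGET_RMS_DB - rms_db(click))

print(f"RMS gap after peak normalization: {rms_gap_db:.1f} dB")
print(f"click peak after RMS normalization: {peak_db(click_rms):+.1f} dBFS")
```

Any value above 0 dBFS in the second case has to be clipped or limited before the file is written, which is where the distortion comes from.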

Typical careless normalization

Content sounds (looks) cool in the first audition (look), but:

- Dynamic range is clipped.

- The original detail is lost forever, and fidelity is compromised.

- Asset already has a “style” baked from the start, restricting creative freedom.

In visuals, it would be the equivalent of using a fake HDR special effect on overexposed footage before importing it into your project, resulting in an aesthetically displeasing image with distortions and color banding.

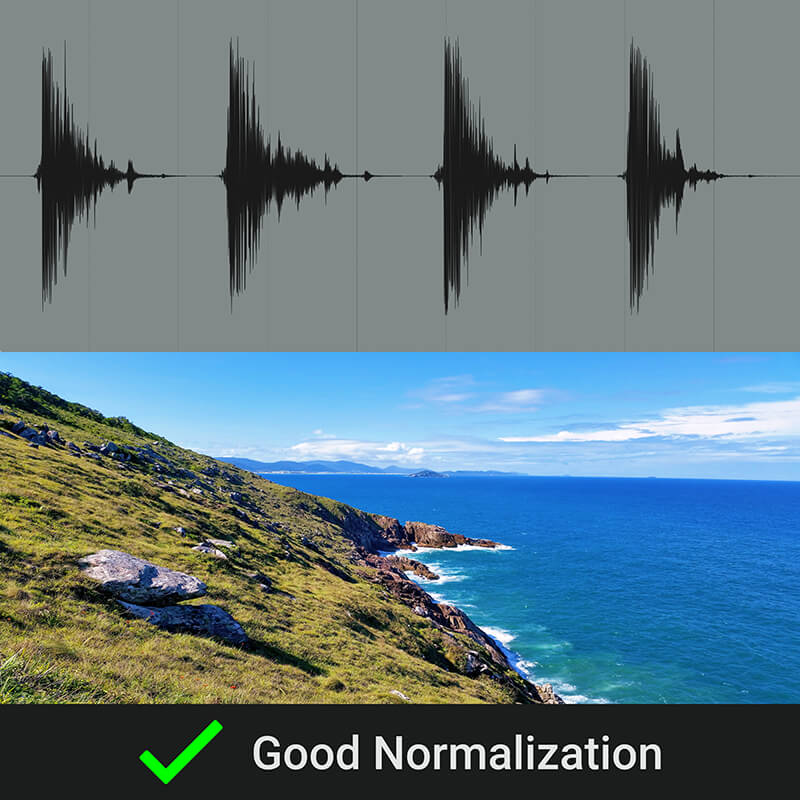

SoundFellas normalization

Content sounds OK in the first audition (look), and:

- The asset has a full dynamic range to support further processing/styling.

- The original fidelity is preserved in the best possible way.

- Asset’s styling is neutral and natural, allowing for further styling and high-fidelity final rendering.

In visuals, it would be the same as using footage from a camera capable of a Log shooting profile, with proper white balance and lighting.

Battle tested in the hands of creators

Experimenting in real production environments

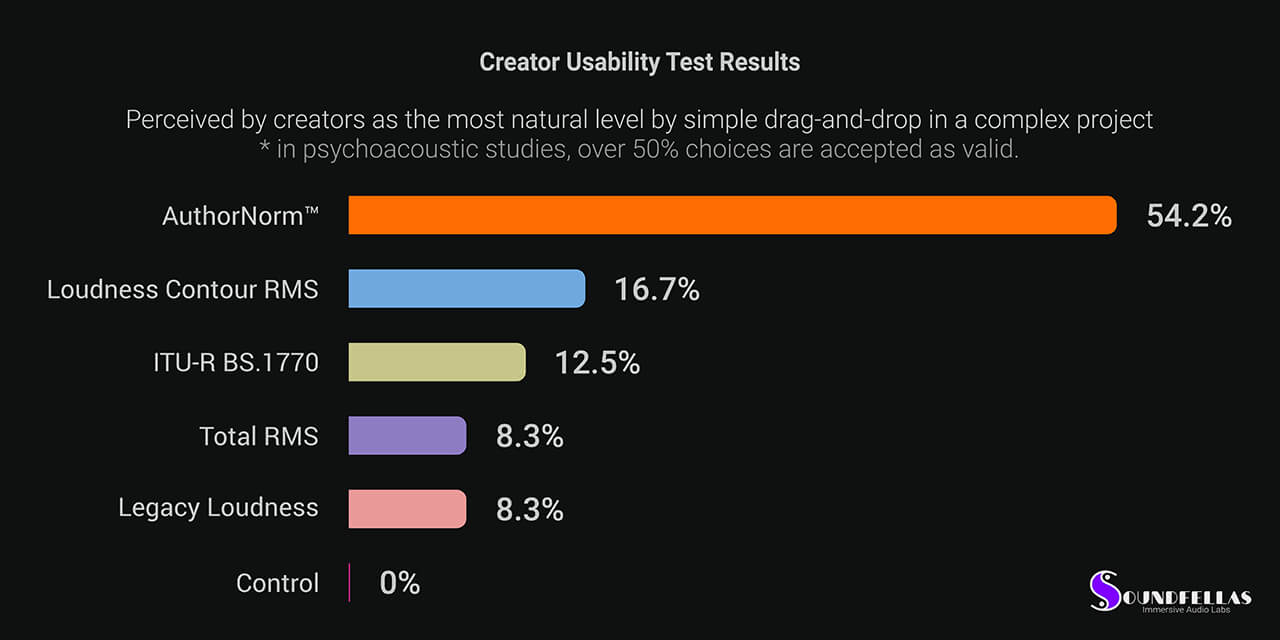

To develop our AuthorNorm™ normalization protocol, we conducted scientific experiments in contemporary media authoring environments, with professional media producers as our test subjects.

The subjects were given a library of mixed audio assets (ambience and music loops, sound effects, Foley, and dialog) normalized using various common philosophies:

- Total RMS: Many producers use simple RMS metering because it gives more natural values than simple peak metering.

- Loudness Contour RMS: RMS metering weighted with the Equal-Loudness Contour curve (ISO 226:2003 revision of the Fletcher–Munson study). Infrequently used by more tech-savvy producers.

- Legacy Loudness: Common RMS windows weighted using A-Weighting or ITU-R 468 curves, used by some older audio editor applications and producers.

- ITU-R BS.1770: The latest perceived loudness measuring recommendation.
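To make the difference between the first and last philosophies in this list concrete, here is a minimal sketch that measures the same signals with plain RMS and with the K-weighting pre-filter from ITU-R BS.1770. It is deliberately simplified: single channel, no gating, and filter coefficients that are only valid at 48 kHz. The helper names are illustrative and are not part of any AuthorNorm™ tooling.

```python
import math

SAMPLE_RATE = 48000  # the coefficients below are only valid at 48 kHz

# (b0, b1, b2, a1, a2) for the two K-weighting stages in ITU-R BS.1770.
SHELF = (1.53512485958697, -2.69169618940638, 1.19839281085285,
         -1.69065929318241, 0.73248077421585)
HIGHPASS = (1.0, -2.0, 1.0, -1.99004745483398, 0.99007225036621)


def biquad(samples, coeffs):
    """Run a second-order IIR filter (direct form I) over the samples."""
    b0, b1, b2, a1, a2 = coeffs
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x in samples:
        y = b0 * x + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
        x2, x1 = x1, x
        y2, y1 = y1, y
        out.append(y)
    return out


def mean_square(samples):
    return sum(s * s for s in samples) / len(samples)


def rms_level_db(samples):
    """Unweighted RMS level in dBFS."""
    return 10.0 * math.log10(mean_square(samples))


def k_weighted_level_db(samples):
    """Ungated, single-channel K-weighted level (simplified BS.1770)."""
    weighted = biquad(biquad(samples, SHELF), HIGHPASS)
    return -0.691 + 10.0 * math.log10(mean_square(weighted))


def sine(freq_hz, seconds=1.0, amplitude=1.0):
    count = int(SAMPLE_RATE * seconds)
    return [amplitude * math.sin(2.0 * math.pi * freq_hz * n / SAMPLE_RATE)
            for n in range(count)]


mid_tone = sine(997.0)  # mid-frequency reference tone
low_tone = sine(50.0)   # low-frequency tone with the same amplitude

for name, tone in (("997 Hz", mid_tone), ("50 Hz", low_tone)):
    print(name, round(rms_level_db(tone), 2), round(k_weighted_level_db(tone), 2))
```

Both tones have the same RMS level, but the K-weighted reading of the 50 Hz tone comes out several dB lower, because the weighting discounts low frequencies that contribute less to perceived loudness. A full BS.1770 meter adds channel summing and gating on top of this.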

Alongside these versions, the subjects were also given a version of the library with no perceived loudness normalization, only peak-normalized to a true peak value of -2 dBTP, a common ceiling for avoiding clipping. This version served as the control, because a mixed library of sounds should not feel natural when everything is normalized to the same peak value.

Finally, the subjects were given a version of the mixed sound library normalized using our AuthorNorm™ protocol.

All the library versions above were labeled with random numbers to keep the tests double-blind, so neither the subject nor the experiment’s supervisor knew which was which.
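Labeling the versions with random numbers can be sketched as follows. This is a hypothetical illustration of the idea, not the tooling used in the experiments: the version names, the four-digit code range, and the function name are all assumptions.

```python
import random

# Version names paraphrased from the list above; the labels are illustrative.
VERSIONS = [
    "Total RMS",
    "Loudness Contour RMS",
    "Legacy Loudness",
    "ITU-R BS.1770",
    "True peak only (control)",
    "AuthorNorm",
]


def assign_blind_codes(versions, rng):
    """Map each version to a unique random four-digit code."""
    codes = rng.sample(range(1000, 10000), len(versions))
    return dict(zip(versions, codes))


rng = random.Random()  # pass random.Random(seed) for a reproducible key
key = assign_blind_codes(VERSIONS, rng)

# Subjects and supervisor only ever see the codes, sorted so that the
# order reveals nothing about which version is which.
folder_names = sorted(str(code) for code in key.values())
print(folder_names)
```

The `key` mapping would be held by someone outside the test sessions and consulted only after the votes are collected.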

To test AuthorNorm™ under actual production conditions, each test subject was given a complex project and was asked to use the different versions of the given library in that project by dragging and dropping the audio assets from the library to the project.

The subjects had complete freedom to switch library versions and take notes, with no time restriction.

Ultimately, the test subjects had to vote on which library version was the easiest to ingest and mix right from the drag-and-drop point.

The results

Because of the nature of hearing and psychology, psychoacoustic studies that use choice tests treat a result greater than 50% as support for the hypothesis.

The results speak for themselves: more than 54% of the creators who took part in our experiments chose AuthorNorm™ as their favorite sound library normalization protocol for production and post-production creative workflows.

Who can benefit from AuthorNorm™

Sound library users

If you use our Sound Libraries, you are in luck: all of them have been remastered using AuthorNorm™, so they are easy to use in your projects right out of the box.

Echotopia and DMDJ users

Game developers, filmmakers, and videographers

Game developers, filmmakers, and videographers can use our Sound Libraries that feature AuthorNorm™, or we can master your audio assets before you use them in your project.

Don’t hesitate to contact us for more details on our services and our very accessible pricing.

Sound librarians and media publishers

If you are a sound library producer, a sound library publisher or store owner, or a stock media publisher, you can take advantage of AuthorNorm™ to ensure that the content you produce or publish has the best loudness standard for your creator customers.

We offer AuthorNorm™ as part of our Audio for Media Mastering services for both sound librarians and media producers. Don’t hesitate to contact us for more details on pricing and white-label options.

Software developers and music instrument creators

Perhaps you are a software developer creating audio or media tools, embedded applications, or music instruments (virtual or not). You may need a protocol that gives your users the most natural experience with your product, or you may want your product’s audio content to have intuitive, natural loudness and dynamic range.

We can help you implement the AuthorNorm™ protocol in your application, or we can master your application’s content using AuthorNorm™.

Please get in touch with us to discuss options.

Producers and service providers

If you are an audio producer or a production studio lead and would like to offer AuthorNorm™ as a service to your clients, or to ingest your content using AuthorNorm™ to accelerate your production rate, we can train you and share our research and protocols so you can start using the most natural loudness control protocol in your production pipeline.

Please get in touch with us to discuss options.