Hi! This is Antonis from SoundFellas and this is my first EVER dev diary entry. Please be gentle...

First of all, allow me to introduce myself. I've been a part of SoundFellas for 10 years now. I started as a sound designer, and I’m currently multiclassing as an Audio Producer and Community Manager.

Today I’m going to write about a little experiment we conducted during the development of Echotopia, our soundscape designer application. The scientific mind behind it was Pan Athen, not me, but as the audio production lead here at SoundFellas I found it particularly interesting, because it's about how to develop a sound classification system for our application. This topic has long been important in professional sound design circles, and as technology advances it is becoming more and more relevant to the general public.

Over the past six months, we gathered data for an experiment on tagging sound content with keywords. Our vision is to create a user-centric sound classification system, so that a vast library of sounds can be tagged, found, and easily shared within a community of users.

We had good participation from our audience, and now we have the results.

The experiment

The experiment was about how people group various types of sound events into bigger categories.

This was necessary because one of the main features any audio editor or player must have is a quick and easy way to find audio content. Software developers use many systems to make the end user's life easier in that respect.

The three most common systems to organize content are:

- Classic tree hierarchies we see in file browsers

- Simple search fields in strategic places on the user interface

- Tags that label objects for easy search

Of those three, tagging is the best fit for audio assets and the one most used by professional audio editors.

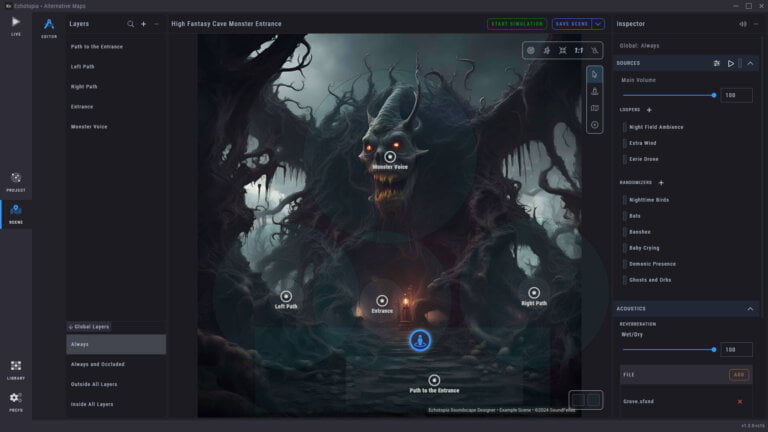

So, for our upcoming soundscape designer application Echotopia, we decided to make this the main way to find sounds.

But solutions usually come with questions.

Tagging system considerations

Tagging is not to be taken lightly by the developer.

First of all, if you want to create an application with an open philosophy so that people can freely create new things with it, you can’t have a completely open tagging system. Once the community starts sharing content, an open system eventually leads to a chaotic tag list that makes it impossible to find all the sounds related to a category. For example, imagine every creator who made a sound preset of a car tagging it with a different word (car, automotive, semi, truck, sedan, etc.), and then someone importing all of those presets into one project and ending up with all those tags in the system describing the same thing.

If you don’t believe it, go ahead and check the absolute state of chaos the sound effect library providers are in right now, and the trouble this is causing to the library users.

The second reason tagging should be designed with care is that if we choose a predefined tagging system to promote openness and easy content sharing, we need to ensure that the top level of the taxonomy includes every category users need to label their content. Sure, you can always add a “Misc” or a “Various” top-level category, but if your system is not designed well, the Misc category might end up holding 50% of all the content. It's the equivalent of all the folders titled “New Folder” that pile up on your hard drives if you don’t take care of naming from the beginning (we’ve all been there).
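To make those two concerns concrete, here is a minimal Python sketch of a closed tag vocabulary. It is only an illustration under stated assumptions, not how Echotopia works: the `TAXONOMY` contents, the `normalize_tag` and `misc_share` helpers, and the sample tags are all made up for this example. It collapses free-form tags into fixed top-level categories and reports how much content falls through to "Misc".

```python
from collections import Counter

# Hypothetical controlled vocabulary: top-level category -> accepted synonyms.
# These categories and words are invented for illustration only.
TAXONOMY = {
    "Vehicles": {"car", "automotive", "semi", "truck", "sedan"},
    "Weather": {"rain", "thunder", "wind"},
}

# Reverse lookup built once: synonym -> top-level category.
SYNONYM_TO_CATEGORY = {
    synonym: category
    for category, synonyms in TAXONOMY.items()
    for synonym in synonyms
}


def normalize_tag(raw_tag):
    """Map a free-form tag to a top-level category, or "Misc" if unknown."""
    return SYNONYM_TO_CATEGORY.get(raw_tag.strip().lower(), "Misc")


def misc_share(raw_tags):
    """Return the fraction of tags that end up in the "Misc" category."""
    counts = Counter(normalize_tag(tag) for tag in raw_tags)
    total = sum(counts.values())
    return counts["Misc"] / total if total else 0.0


# Tags as they might arrive from several creators' shared presets.
imported = ["car", "Truck", "sedan ", "rain", "creaky door", "laser"]
print([normalize_tag(tag) for tag in imported])
print(f"Misc share: {misc_share(imported):.0%}")
```

A high Misc share on real content would be a hint that the top level of the taxonomy is missing categories users actually need.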

What we did

So we took a scientific approach to understanding how our users like to organize sounds in their minds. The type of experiment we used is called “Card Sorting”. The fun thing about card sorting is that no two people organize things the same way, but analyzing the results gives you a very good picture of the system you need to create.

In our experiment, we gathered 30 board game players and DMs, gave them a fair number of words describing sounds, and asked them to sort those words into groups that they created and named themselves. This way, the participants showed us which categories they think a sound taxonomy should have, and which sounds belong under each of those main categories.
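One common way to analyze open card sort results like these is to count how often two cards land in the same group, regardless of what each participant named that group. The Python sketch below shows the idea. It is an assumption about how such data could be analyzed, not a description of the analysis we ran, and the `answers` data and the `co_occurrence` helper are invented for illustration.

```python
from collections import Counter
from itertools import combinations

# Made-up answers: each participant maps their own group names to cards.
answers = [
    {"Nature": ["rain", "wind"], "City": ["traffic", "crowd"]},
    {"Outdoors": ["rain", "wind", "traffic"], "People": ["crowd"]},
    {"Weather": ["rain", "wind"], "Urban": ["traffic", "crowd"]},
]


def co_occurrence(answers):
    """Count, for every pair of cards, how many participants grouped them together."""
    pair_counts = Counter()
    for groups in answers:
        for cards in groups.values():
            # Sort so that (a, b) and (b, a) are counted as the same pair.
            for a, b in combinations(sorted(cards), 2):
                pair_counts[(a, b)] += 1
    return pair_counts


pairs = co_occurrence(answers)
for (a, b), count in pairs.most_common():
    print(f"{a} + {b}: grouped together by {count} of {len(answers)} participants")
```

Pairs that almost everyone groups together are good candidates for sharing a top-level category, even when the group names people chose differ.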

Examples of user answers

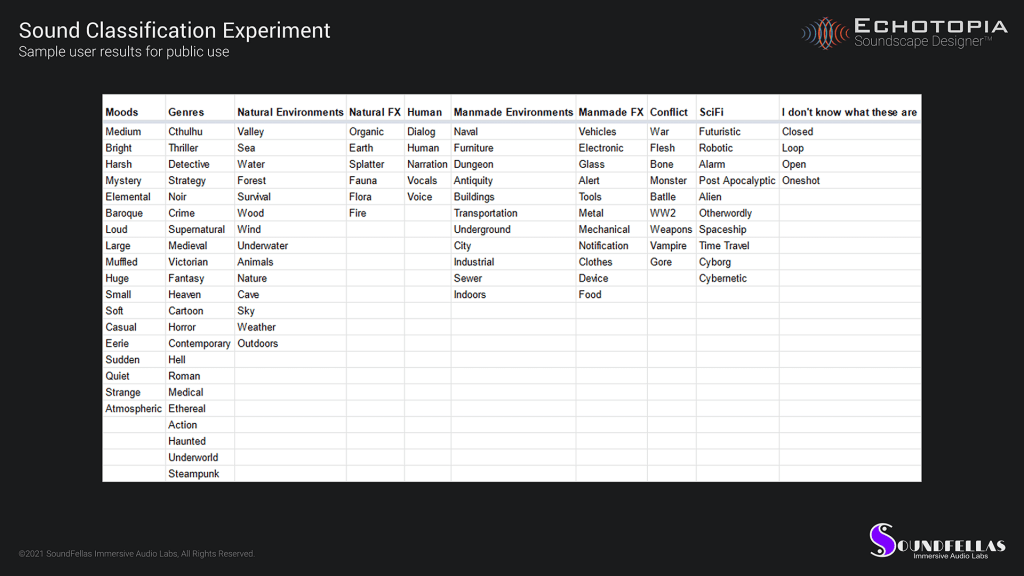

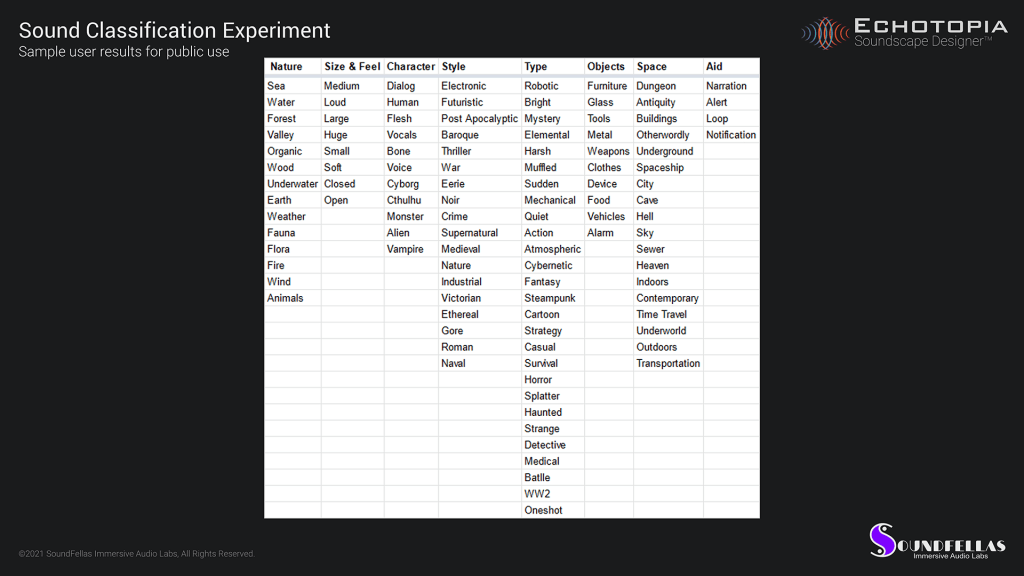

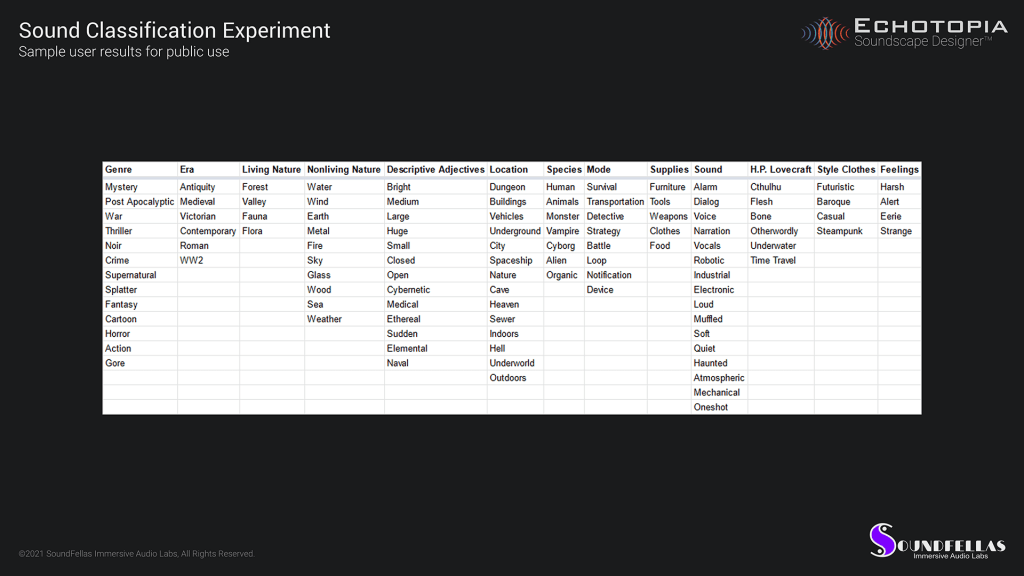

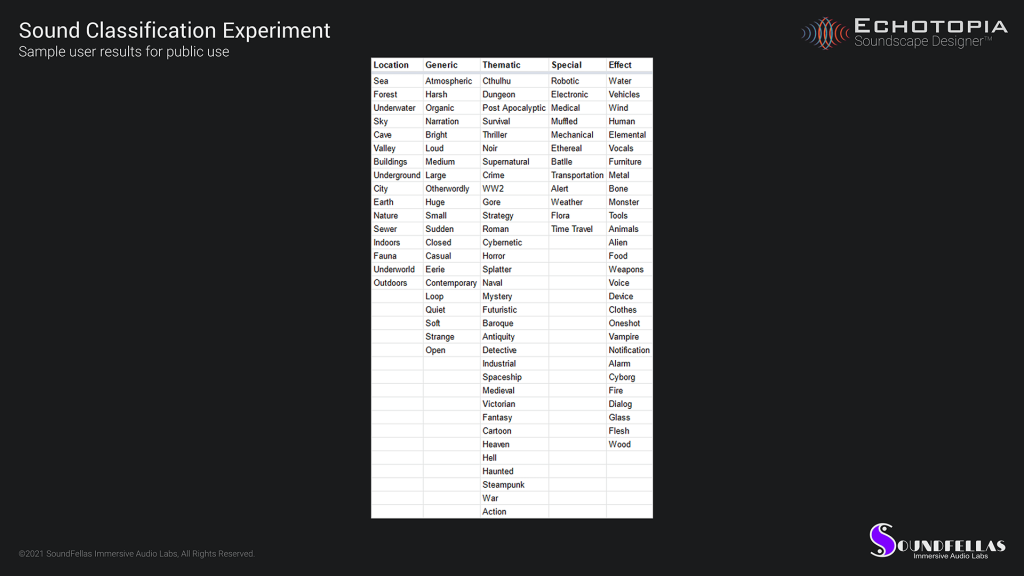

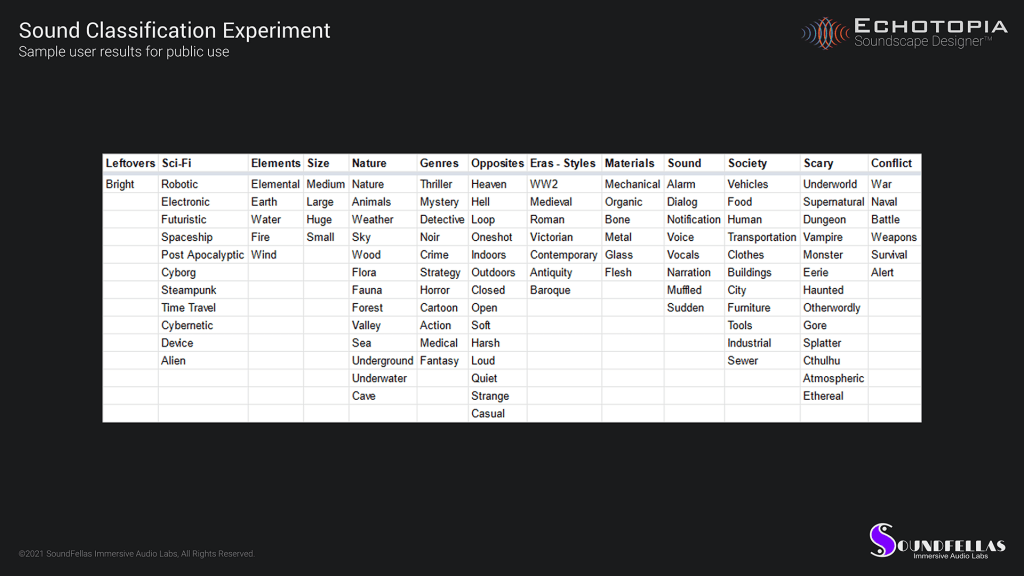

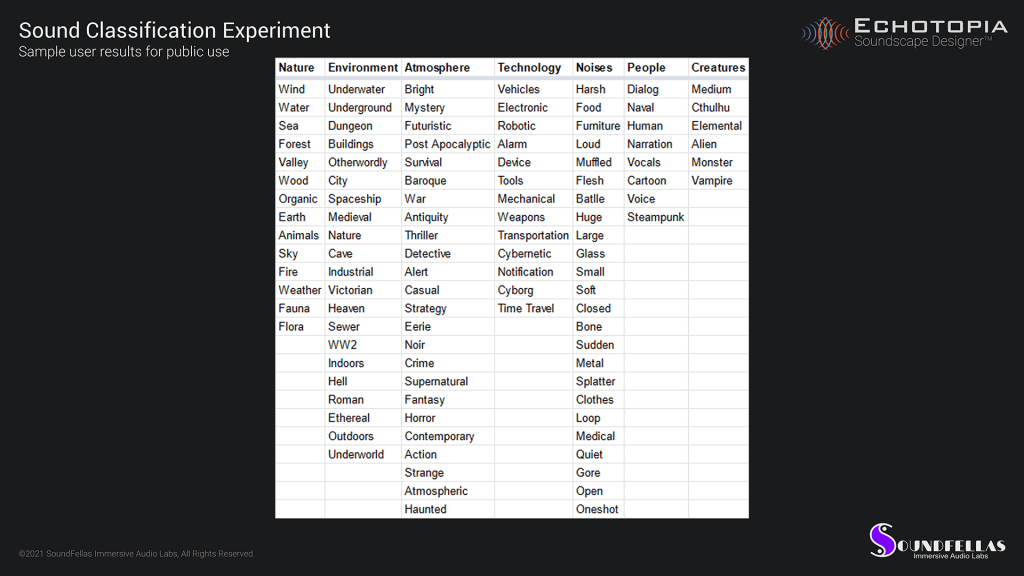

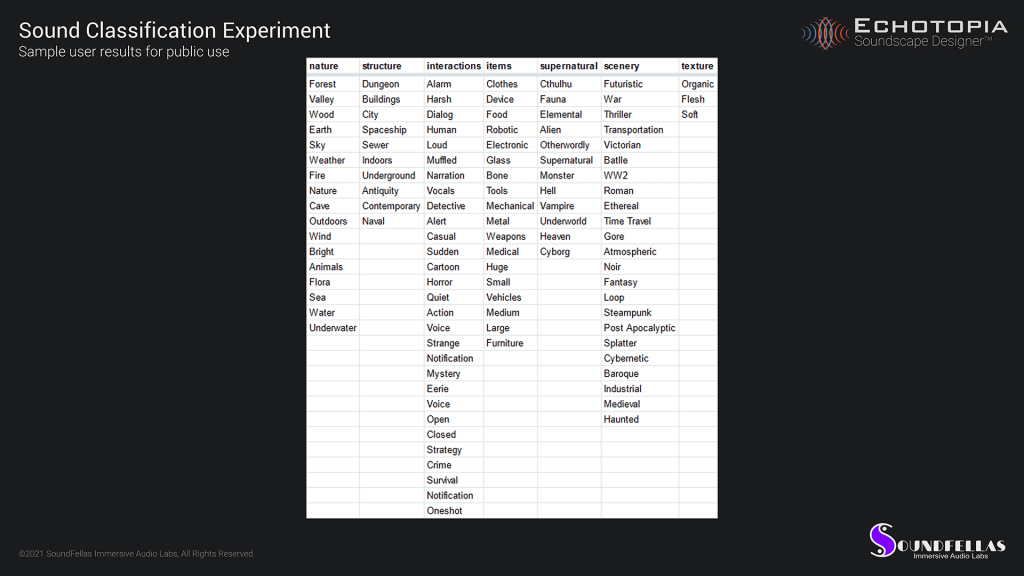

The images above show some of the user answers we got.

As you can see, there is a lot of variety. No two answers are the same.

Conclusion

The good news is that this experiment gave us a clear picture of the final taxonomy that we would use in our tagging system so that all Echotopia users get to enjoy an intuitive and fast way to work with sound in their stories.

Taxonomy was one of the bigger questions we had to answer when designing Echotopia, together with the audio content technical specifications (such as format, encoding, resolution, etc.), and scalability to high channel count formats such as Dolby Atmos, but more on those in later posts.

Now, the taxonomy specification has been passed to Manolis Benetos, our software architect, so expect to hear from him soon regarding the further development of our taxonomy system and its integration into our code. I’m currently working on the material that will ship by default with Echotopia, and I’ll be sharing some juicy morsels with you soon.

You can find out more about Echotopia here.